A while ago, in a grump, I tweeted something along the lines of “I’m fairly convinced that most social scientists who write about algorithms do not understand what that term means”… Provocative I know, but I was, like I said, in a grump. Rob Kitchin tweeted me back saying that he looked forward to the blogpost – well here it is – finally.

I’m afraid its not going to be a well-structured and in-depth analysis of what one may or may not mean by the word algorithm because, well, other people have already done that. What I want to offer here is an expression of the anxiety that lay behind my grumpy tweet and means of mitigating that. So, this is a post that uses other people’s work to address:

- what is an algorithm?

- how/should we analyse/study them?

To be totally open from the start, my principle sources here are:

- Paul Ford’s lovely (very!) extended essay ” What is Code?” (see in particular Section 2.4)

- Tarleton Gillespie’s brilliant “Algorithm” entry for the #digitalkeywords theme on the Culture Digitally site.

- Rob Kitchin’s excellent synthesising working paper “Thinking critically about and researching algorithms“

So, what do we mean when we use the word ‘algorithm’?

To begin with a straight-forward answer from Paul Ford:

“Algorithm” is a word writers invoke to sound smart about technology. Journalists tend to talk about “Facebook’s algorithm” or a “Google algorithm,” which is usually inaccurate. They mean “software.”

Algorithms don’t require computers any more than geometry does. An algorithm solves a problem, and a great algorithm gets a name. Dijkstra’s algorithm, after the famed computer scientist Edsger Dijkstra, finds the shortest path in a graph. By the way, “graph” here doesn’t mean

but rather

Or, as Rob Kitchin notes in his working paper it is (following Kowalski) “logic+control“, such that (citing Miyazaki) the term denotes a form of:

specific step-by-step method of performing written elementary arithmetic… [and] came to describe any method of systematic or automatic calculation.

This answers a simple definitional question: “what is an algorithm?” but doesn’t quite answer the question I’ve posed: “what do we mean when we use the word algorithm?”, which Ford gestures towards when suggesting journos (and one might include a lot of academics here) use the word ‘algorithm’ when they mean ‘software’. As Gillespie notes

there is a sense that the technical communities, the social scientists, and the broader public are using the word in different ways

There isn’t a single/singular meaning of the word then (of course!) and after decades of post-structuralism that really shouldn’t be a surprise… nevertheless there is a kind of discursive politics being performed when we (geographers, social scientists etc etc) invoke the term and idea of an ‘algorithm’, and we perhaps need to reflect upon that a little more than we do. I may be wrong, perhaps we just need to let the signifier/signified relation flex and evolve – my main motivation for addressing this question is that I think we do already have useful words that address what is being suggested in the use of the word ‘algorithm’ – amongst these words are: code, function (as in software function), policy, programme, protocol (e.g. Ã la Alexander Galloway), rule and software.

What is salient about the technical definition of an algorithm to how we might use the word more broadly is the sense of a (logical) model of inferred relations between things formally defined in code that is developed through iteration towards a particular end. As Gillespie notes:

Engineers choose between them based on values such as how quickly they return the result, the load they impose on the system’s available memory, perhaps their computational elegance. The embedded values that make a sociological difference are probably more about the problem being solved, the way it has been modeled, the goal chosen, and the way that goal has been operationalised.

What quickly takes us away from simply thinking about a function defined in code is that the programmes or scripts upon which the algorithms are founded need to be validated in some way – to test their effectiveness, and this involves using test data (another word that has become fashionable). The selection of the data also necessarily has embedded assumptions, values and workarounds which, as Gillespie goes on to suggest: “may also be of much more importance to our sociological concerns than the algorithm learning from it.” The code that represents the algorithm is instantiated either within a software programme – a collection of instructions, operations, functions etc. etc. – that is bundled together as what we used to call an ‘application’ or it might exist in a ‘script’ of code that gets pulled into use as and when – for example: in the context of a website. As Gillespie argues:

these exhaustively trained and finely tuned algorithms are instantiated inside of what we might call an application, which actually performs the functions we’re concerned with. For algorithm designers, the algorithm is the conceptual sequence of steps, which should be expressible in any computer language, or in human or logical language. They are instantiated in code, running on servers somewhere, attended to by other helper applications (Geiger 2014), triggered when a query comes in or an image is scanned. I find it easiest the think about the difference between the “book” in your hand and the “story” within it. These applications embody values as well, outside of their reliance on a particular algorithm.

This is often missed when algorithms are invoked, all-too-quickly, in the discussion of contemporary phenomena that involve the use of computing in some way. Like Kitchin says in his working paper:

As a consequence, how algorithms are most often understood is very narrowly framed and lacking in critical reflection.

Indeed, I’d go further, there’s a weird sense in which some discussions of ‘algorithms’ connote the dystopian sci-fi of The Matrix, or The Terminator. Ian Bogost has gone so far as to suggest that this is a form of ‘worship’ of the idea of an ‘algorithm’, suggesting

algorithms hold a special station in the new technological temple because computers have become our favorite idols.

And as Gillespie noted in an earlier piece:

there is an important tension emerging between what we expect these algorithms to be, and what they in fact are

Yet, as with production of religious texts, there are people making decisions every step of the way in the production of software. On top of that, there were decisions made by people in all of the steps of the development and production of the other technologies and infrastructures upon which that software rely.

What we mean when we use the word ‘algorithm’ is, as Gillespie argues (much better than I), a synecdoche: “when we settle uncritically on this shiny, alluring term, we risk reifying the processes that constitute it. All the classic problems we face when trying to unpack a technology, the term packs for us”.

Calling the complex sociotechnical assemblage an “algorithm” avoids the need for the kind of expertise that could parse and understand the different elements; a reporter may not need to know the relationship between model, training data, thresholds, and application in order to call into question the impact of that “algorithm” in a specific instance. It also acknowledges that, when designed well, an algorithm is meant to function seamlessly as a tool; perhaps it can, in practice, be understood as a singular entity. Even algorithm designers, in their own discourse, shift between the more precise meaning, and using the term more broadly in this way.

How should we study algorithms?

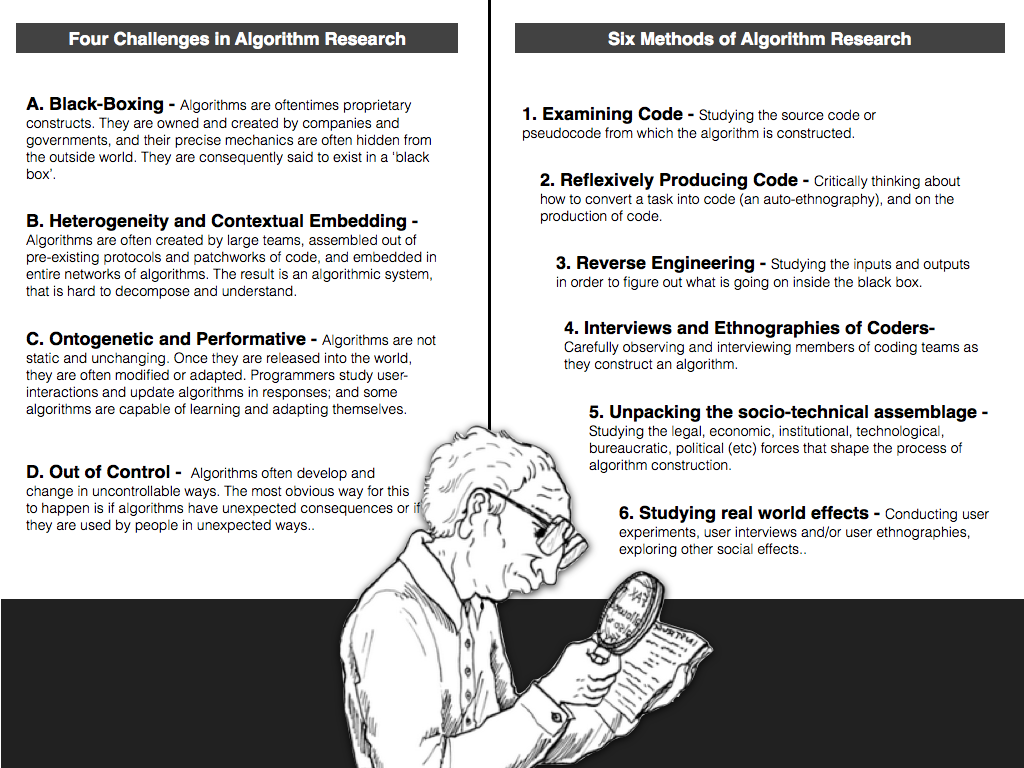

Here, I’m not totally sure I can add much to what Kitchin has written in his working paper. This has been adroitly summarised by John Danaher on his blog, and he produced this brilliant graphic:

We need to be alive to the challenge that most software we may be interested in is proprietary, although not always and we can look to repositories like GitHub for plenty of open source code, and so it may actually prove impossible to gain access to the code itself –– and here we’d really want the code of the whole programme, and perhaps the training data too. Likewise, software like any complex endeavour may well be the result of collective authorship and maintained by lots of different people. So there are complex sets of relations between people, laws, protocols, standards and many other considerations to negotiate – they are contextually embedded. Furthermore, the programmes we’re calling algorithms here actually have a hand in producing the world within which they exist –– they may bring new kinds of entities and relationships into existence, they may formulate new and different forms of spatial relation and understanding. In this sense they are ontogenetic and performative. Added to this, once ‘in the wild’ this performativity can render the kinds of outcomes of a programme unexpected and peculiar, especially once it is fed all sorts of data, adapted and adopted in unexpected ways.

How can we go about studying these kinds of socio-technical systems then? Well, rather than treat as discrete the six techniques offered by Kitchin (summarised above) – I’d argue we need to combine most of these. Even so, it may prove extremely difficult to actually gain access to code. Further, even if one might reflexively produce code and/or attempt to reverse engineer software –– some systems are the product of companies with such extensive resources that it may well prove near-impossible to do so. Where social scientists might find more traction and actually be able to make a more valuable contribution is, as Kitchin suggests, looking t the full sociotechnical assemblage. We can look at the wider institutional, legal and political apparatuses (the dispositifs) and we can certainly look at the various kinds of relation the assemblages make and how they are enrolled in performing the world they inhabit.

Make no mistake – this kinds of research is necessarily hard. I’m not sure I can imagine papers in geography journals that tie the a/b testing logs of experiments in how a given system works and commit logs to version control systems (even if you could access them!) to particular forms of experience and/or their political consequences… but it might be worth a go. Perhaps this difficulty is why we see (and I am as guilty as any of this) papers written in abstract terms, focusing on the (social) theoretical ways we can talk about these sociotechnical systems in broad terms.

Like Bogost, I can see a kind of pseudo-theological romancing of the ‘algorithm’ and the agency of software in much of what is written about it, and its sort of easy to see why – it is so abundant and yet relatively hidden. We see effects but do not see the systems that produce them:

Algorithms aren’t gods. We need not believe that they rule the world in order to admit that they influence it, sometimes profoundly. Let’s bring algorithms down to earth again.

The algorithm as synecdoche is a kind of ‘talisman’, as Gillespie argues, that reveals something of what Stiegler calls our ‘originary technicity’ – the sense in which we (humans) have always already been bound up in technology and its the forgetting and then remembering of this that forges our ongoing reconstitution (transindividuation) of ourselves.

So, I’d like to end with Kitchin’s argument:

it is clear that much more critical thinking and empirical research needs to be conducted with respect to algorithms [and the software and sociotechnical systems in which they are necessarily embedded] and their work.

Addendum:

It is worth noting that both Gillespie and Kitchin draw on another paper that is very much worth reading by Nick Seaver, who reminded me of this on Twitter, take a look:

“Knowing Algorithms“, presented at Media in Transition 8, Cambridge, MA, April 2013 [revised 2014].